Footnote Detection

What does it take to understand a page visually at the machine level?

That is our guiding question and one that we are initially applying to the problem of detecting footnotes in a large corpus of German Enlightenment periodicals.

Why footnotes?

According to much received literary critical wisdom, one of the defining features of the Enlightenment is not only a growth in periodicals — more printed material circulating about in more timely fashion than ever before – but also more footnotes or more broadly speaking more indeces – that is, more print that points to print. As the world of print became increasingly heterogenous and faster paced, print evolved mechanisms for indexing and pointing to this increased amount of material to make it intelligible. Indexicality, as my colleague Chad Wellmon has argued, is one of the core features of Enlightenment.

Below I give some idea of the process of what it means to identify footnotes through a process of what we call visual language processing. This is work that has been produced by the stellar efforts of the members of the Synchromedia Lab at Montréal’s ETS directed by Mohamed Cheriet. These members include: Ehsan Arabnejad, Youssouf Chherawala, Rachid Hedjam, and Hossein Ziaei Nafchi.

Step 0: Know thy page.

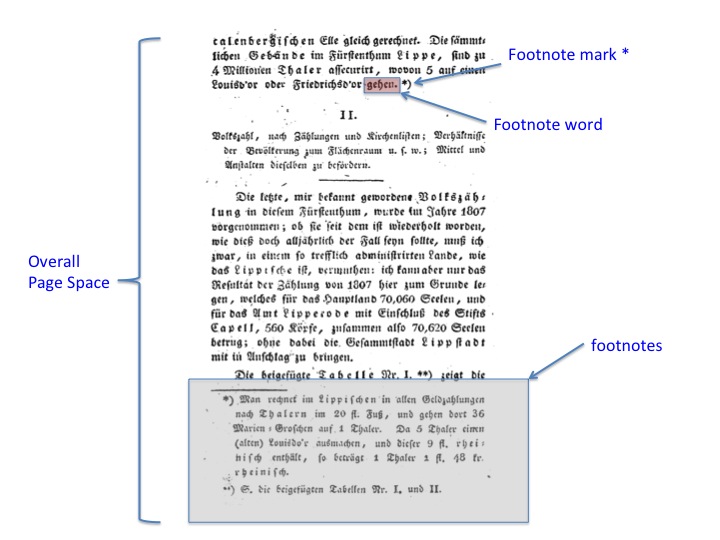

Here is an image of a sample page. This gives some indication of the overall layout and quality of the images. The good news is that unlike manuscripts, these pages are very regular. The bad news is that regular is a relative term. The very bad news is the quality of the reproduction.

Step 0.1: Hypotheses

To begin, the humanist team member (in this Andrew Piper) identified four possible features in advance of the project that he thought might be computationally identifiable and would also be of interest to literary historians. These are:

- Footnotes per page. If the thesis is that Enlightenment = indexicality, then we should find more footnotes as time progresses in our data set. The first, most basic question is, how many footnotes are there in this data and can we get a per page measure?

- Footnoted Words. Can we capture the words that are most often footnoted, and if so, what can this tell us about Enlightenment concerns? When authors footnote, do these cluster around common semantic patterns?

- Footnote Length. Does length matter? Are longer footnotes indicative of a certain paratextual style that is useful for grouping documents into categories? When authors use long versus very short footnotes, do they tend to be working in similar genres?

- Language Networks. Can we detect which language is being referred to in the footnote? Knowing the degree to which footnotes become more/less internationally minded as the Englightenment progresses into the nineteenth century will give us an indication of the natioanalization of print discourse during this period. Because German uses different type faces for Latin and German scripts we should be able to identify at least these distinctions (with perhaps Greek and Arabic added in).

- Citation Networks. This is the holy grail: can we extract the references that are cited in the footnote? Can we construct a citation network for our our periodicals, a record of all the references that are mentioned in German periodicals over an eighty-year period to better understand the groupings and relationships that print articulates about itself?

Step 1: Enhance images

Once these hypotheses were in place, the next step was to prepare a sample set of pages for detection. This involves two steps:

First, try to repair the incompleteness of the reproduction by focusing on a) reducing background noise and b) enhancing the stroke completion of the letters.

a) Reducing background noise

b) Enhancing holes in strokes

Second, pages are not all uniformly aligned. So we need to correct for skew in order to perform word segmentation and line measurements (and remove shadows from the OCR process).

Step 2: Footnote Markers

Once the pages have been processed, we now search for our first feature. Can we reliably find footnote markers? In modern texts these are usually numbers, in the periodicals they were most often asterisks *, or two **. These three slides should give a good idea of the task and the challenges. The results on our sample set for accurately detecting footnote markers were:

Precision = 85.58% and Recall = 71.65%.

Step 3: Footnoted Words

Next we began looking to see if we could capture just those before the footnote mark. Is there something common to the footnote vocabulary of the German Enlightenment?

Step 4: Footnote Length

Next, we concerned ourselves with trying to identify the length of footnotes. This involved a process of line segmentation instead of word segmentation.

Step 5: Language Detection

Finally, we began to explore how we could identify words in different languages. We found a very interesting way of finding it at the level of the line by comparing the distribution of letter heights. It turns out that German and Arabic script, for example, have very different emphases on up and down strokes. We are still working on trying to understand this at the level of the single word or phrase.

Step 6: Citation Network

Our last very high level question is: can we detect the words that indicate titles to which footnotes are referencing. This is a much more complex problem. To be continued…