Just Review, a student-led project on gender bias in book reviewing

For years, women have been aware that their books are less likely to be reviewed in the popular press and that they are less likely to serve as book reviewers. Projects like VIDA and CWILA were started to combat this kind of exclusion, and over time they have managed to change the industry. Although the numbers are nowhere near parity, more women are being reviewed in major outlets than five or ten years ago.

Just Review was started in response to the belief that things were getting better. Just because more female authors are being reviewed doesn’t mean those authors aren’t being pigeon-holed or stereotyped into writing about traditionally feminine topics. “Representation” is more than just numbers. It’s also about the topics, themes, and language that circulate around particular identities. In an initial study run out of our lab, we found a depressing association between certain kinds of words and the book author’s gender (even when controlling for the reviewer’s gender). Women were strongly associated with all the usual tropes of domesticity and sentimentality, while men were associated with public-facing terms related to science, politics and competition. It seemed like we had made little progress from a largely Victorian framework.

To address this, we created an internship in “computational cultural advocacy” in my lab focused on “women in the public sphere.” We recruited five amazing students from a variety of different disciplines and basically said, “Go.”

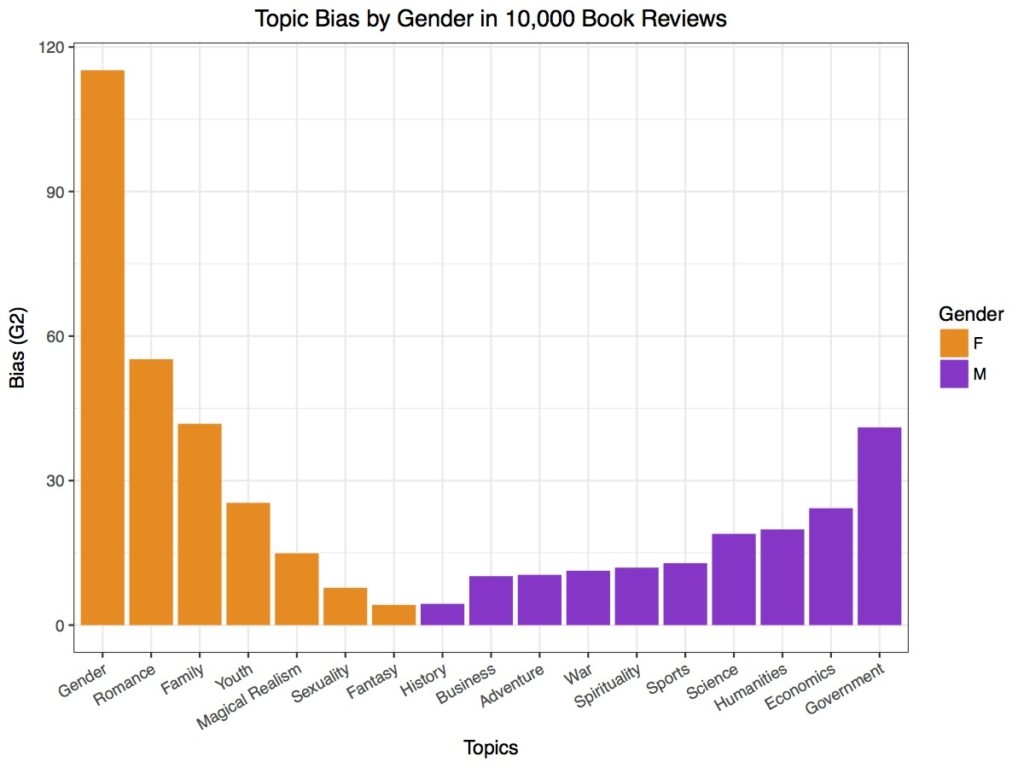

The Just Review team set about understanding the problem in greater detail, working together to identify their focus more clearly (including developing the project title Just Review) and reaching out to stakeholders to learn more about the process. By the end, they had created a website, advocacy tools to help editors self-assess, recommendations for further reading, and a computational tool that identifies a book’s theme based on labels from Goodreads.com. If you know the author’s gender and the book’s ISBN (or even title), you can create a table that lists the themes of the books reviewed by a website. When we did this for over 10,000 book reviews in the New York Times, we found that there are strong thematic biases at work, even in an outlet prized for its gender equality.
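To give a rough sense of what such a theme table looks like, here is a minimal sketch in Python. It is not the team’s actual tool: the record layout, the function names `theme_table` and `build_rows`, the placeholder ISBNs, and the theme labels are all invented for illustration, and it assumes the Goodreads labels have already been collected for each reviewed book.

```python
from collections import Counter, defaultdict

# Hypothetical records: one per reviewed book. The ISBNs, genders, and
# theme labels below are made up for illustration, not real review data.
SAMPLE_REVIEWS = [
    {"isbn": "0000000001", "author_gender": "F", "themes": ["romance", "family"]},
    {"isbn": "0000000002", "author_gender": "M", "themes": ["politics", "history"]},
    {"isbn": "0000000003", "author_gender": "F", "themes": ["family", "history"]},
    {"isbn": "0000000004", "author_gender": "M", "themes": ["politics"]},
]


def theme_table(reviews):
    """Count, for each theme, how many reviewed books fall under it per author gender."""
    counts = defaultdict(Counter)
    for review in reviews:
        # Use a set so a label repeated on one book is only counted once.
        for theme in set(review["themes"]):
            counts[theme][review["author_gender"]] += 1
    return counts


def build_rows(counts, genders=("F", "M")):
    """Flatten the counts into rows of (theme, count per gender...), sorted by theme."""
    return [
        (theme, *(counts[theme][g] for g in genders))
        for theme in sorted(counts)
    ]


if __name__ == "__main__":
    print(f"{'theme':<10} {'F':>3} {'M':>3}")
    for theme, female, male in build_rows(theme_table(SAMPLE_REVIEWS)):
        print(f"{theme:<10} {female:>3} {male:>3}")
```

A table like this makes thematic skew easy to spot: a theme whose counts sit almost entirely in one column is being reviewed mostly for authors of one gender.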

Beyond the important findings they have uncovered, the really salient point about this project is that it has been student-led from the beginning. It shows that with mentoring and commitment young women can become cultural advocates. They can take their academic interests, apply them to existing problems in the world, and effect change. There has been a meme for a while in the digital humanities that the field is alienating to women and feminists. I hope this project shows just how integral a role data and computation can play in promoting ideals of gender equality.

We will be creating a second iteration in the fall that will focus on getting the word out and tracking review sites more closely with our new tools. Congratulations to this year’s team who have made a major step in putting this issue on the public’s radar.
