The Covid That Wasn’t: Counterfactual Journalism Using GPT

Very excited to announce a new student-led paper out in the ACL Proceedings as part of the SIGHUM workshop. The project was designed and carried out by Sil Hamilton, an MA student in Digital Humanities at McGill, and offers valuable insight into how we can use new large language models to study human behaviour.

Sil’s question was essentially: given a language model that had never heard of Covid, what would it say if it were prompted with headlines from the news?

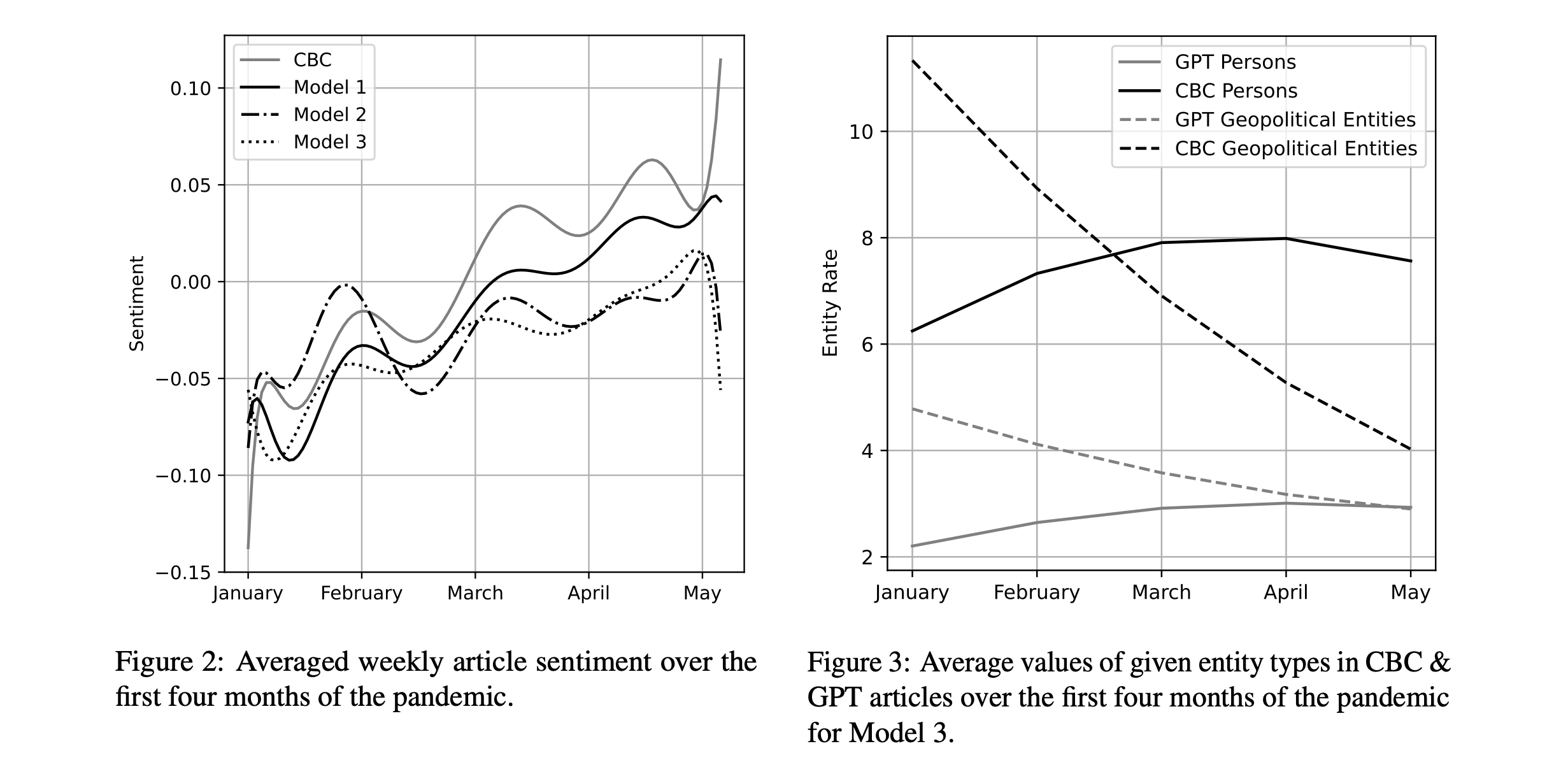

To do this, he used a collection of CBC articles from the first four months of the pandemic, fine-tuned GPT-2, and used it to generate thousands of “artificial” CBC articles, with the headlines of actual articles as prompts. He then compared the simulated corpus with the actual corpus to observe differences. What would GPT say about Covid that journalists did not?
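A minimal sketch of the headline-prompting setup, assuming each article is stored as a headline plus body and the two are joined by a blank line. That format and the helper names are hypothetical, not necessarily what the paper used; the only fixed detail is `<|endoftext|>`, GPT-2's end-of-text token.

```python
# Hypothetical formatting helpers; the separator choice is an assumption.
EOS = "<|endoftext|>"  # GPT-2's end-of-text token


def training_example(headline: str, body: str) -> str:
    """Join a headline and its article body into one fine-tuning string."""
    return f"{headline.strip()}\n\n{body.strip()}{EOS}"


def generation_prompt(headline: str) -> str:
    """Prompt with the headline alone so the model writes the body."""
    return f"{headline.strip()}\n\n"


def strip_prompt(prompt: str, generated: str) -> str:
    """Keep only the model's continuation, cut at the first end-of-text token."""
    if generated.startswith(prompt):
        continuation = generated[len(prompt):]
    else:
        continuation = generated
    return continuation.split(EOS, 1)[0].strip()
```

Feeding `generation_prompt(headline)` to the fine-tuned model and passing its output through `strip_prompt` would yield one artificial article per real headline, giving two corpora that line up headline by headline.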

The findings were indeed interesting.

Sil found that GPT was consistently far more negative than the CBC; that is, it saw more of an emergency at hand. This was not the case when he ran the same process on non-Covid articles, where GPT was actually less negative than the CBC, so the gap is not simply a built-in negativity of the model. GPT also interpreted the event in more biomedical terms, i.e. as a health emergency, whereas the CBC tended to focus more on geopolitical framing and high-profile people.
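To make the comparison concrete, here is a toy sketch that measures how often each corpus uses words from small hand-picked lists for negativity, biomedical framing and geopolitical framing. The word lists, helper names and example sentences are all hypothetical, and a simple word-list rate stands in for whatever sentiment and framing measures the paper actually uses.

```python
import re

# Toy word lists for illustration only; NOT the lexicons or models used in the paper.
NEGATIVE = {"crisis", "emergency", "death", "deaths", "fear", "outbreak", "risk"}
BIOMEDICAL = {"virus", "infection", "symptoms", "patients", "hospital", "vaccine"}
GEOPOLITICAL = {"government", "minister", "border", "china", "trudeau", "trade"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def rate(texts, lexicon):
    """Share of all tokens in `texts` that belong to `lexicon`."""
    tokens = [t for text in texts for t in tokenize(text)]
    if not tokens:
        return 0.0
    return sum(t in lexicon for t in tokens) / len(tokens)


def compare(real, generated):
    """Generated-minus-real rate per word list; positive means the model uses it more."""
    lexicons = {
        "negative": NEGATIVE,
        "biomedical": BIOMEDICAL,
        "geopolitical": GEOPOLITICAL,
    }
    return {name: rate(generated, lex) - rate(real, lex) for name, lex in lexicons.items()}


# Made-up sentences, not data from either corpus.
real = ["The minister met trade officials at the border"]
generated = ["The virus outbreak is an emergency for hospital patients"]
print(compare(real, generated))
```

Comparing rates rather than raw counts keeps corpora of different sizes comparable, and running the same comparison on non-Covid headlines provides the kind of baseline the findings above rely on.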

As we discuss in the article, we thus see three main “biases” in CBC reporting through the lens of our computational models:

- Rally-around-the-flag effect: more positive coverage than expected given a major health crisis

- Early geo-political framing

- Person- rather than disease-centred reporting

We think that language models and approaches to text simulation can play an important role in assessing past human behaviour when it comes to media, creativity and communication. While much work has gone into understanding the biases inherent in language models, we also see an opportunity to use language models to shine a light on the biases of human expression.

This is just the tip of the iceberg in understanding what language models can tell us about ourselves.